The mail server components: http://code.google.com/p/iredmail/wiki/Main_Components

The discussion forum: http://www.iredmail.org/forum/

1. Preliminary Note

In this tutorial I use:

Hostname: mail.test.vn

Admin account: Postmaster@test.vn

Mail domain: test.vn

Mail delivery (mailboxes) path: /home/vmail/domains

These settings might differ for you, so you have to replace them where appropriate.
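Before running the installer, it can help to confirm that the hostname you plan to use is fully qualified and matches what the system reports. The following is only a sketch using the example values above; the variable names and the `is_fqdn` helper are illustrative and not part of iRedMail.

```shell
# Example values from this tutorial; replace them with your own.
MAIL_HOSTNAME="mail.test.vn"
MAIL_DOMAIN="test.vn"

# Hypothetical helper: succeeds if the argument contains at least one dot
# and no empty labels (no leading, trailing, or doubled dots).
is_fqdn() {
    case "$1" in
        *.*) ;;
        *) return 1 ;;
    esac
    case "$1" in
        .*|*.|*..*) return 1 ;;
    esac
    return 0
}

if is_fqdn "$MAIL_HOSTNAME"; then
    echo "Hostname $MAIL_HOSTNAME looks fully qualified (domain: $MAIL_DOMAIN)"
else
    echo "Hostname $MAIL_HOSTNAME is not fully qualified" >&2
fi

# Compare with what the system reports (hostname -f prints the FQDN).
CURRENT="$(hostname -f 2>/dev/null || true)"
if [ "$CURRENT" != "$MAIL_HOSTNAME" ]; then
    echo "Note: system reports '$CURRENT', this tutorial uses '$MAIL_HOSTNAME'"
fi
```

If the last line prints a note, either adjust the variables to your real hostname or fix the system hostname first.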

Requirements

Install CentOS 5.x. I suggest a minimal install; make sure you don't install Apache, PHP and MySQL. If they are already installed, you can remove them with yum. Make sure yum is working, because the installation needs to fetch packages from the CentOS package repositories.
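One way to check for the packages mentioned above is sketched here. The package names (httpd, php, mysql-server) are the usual CentOS ones and are an assumption, as is the `find_conflicts` helper; adjust the list to your system.

```shell
# Packages that should not be present before installing.
# These names are assumed (typical CentOS package names).
CONFLICTS="httpd php mysql-server"

# Hypothetical helper: reads installed package names on stdin, one per
# line, and prints those that appear in $CONFLICTS.
find_conflicts() {
    while read -r pkg; do
        for c in $CONFLICTS; do
            if [ "$pkg" = "$c" ]; then
                echo "$pkg"
            fi
        done
    done
    return 0
}

# Only query the RPM database if rpm is available on this machine.
if command -v rpm >/dev/null 2>&1; then
    found="$(rpm -qa --qf '%{NAME}\n' | find_conflicts | sort -u)"
    if [ -n "$found" ]; then
        echo "Installed packages to remove first:"
        echo "$found"
        echo "Remove them with: yum remove $(echo $found)"
    else
        echo "No conflicting packages found"
    fi
fi
```

The script only prints the yum command instead of running it, so you can review the list before removing anything.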

Installation

Download the iRedMail script:

wget http://iredmail.googlecode.com/files/iRedMail-0.4.0.tar.bz2

tar xjf iRedMail-0.4.0.tar.bz2
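If the download was interrupted, tar may fail halfway through unpacking. A small sketch for checking the archive first; the `archive_ok` helper is hypothetical and simply lists the archive contents without extracting them.

```shell
ARCHIVE="iRedMail-0.4.0.tar.bz2"

# Hypothetical helper: list the archive without extracting it. tar exits
# non-zero if the file is missing, truncated, or not a bzip2 tarball.
archive_ok() {
    [ -f "$1" ] && tar tjf "$1" >/dev/null 2>&1
}

if archive_ok "$ARCHIVE"; then
    echo "$ARCHIVE looks intact"
else
    echo "$ARCHIVE is missing or corrupt; download it again" >&2
fi
```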

Run the script to download all mail server related RPM packages. It only downloads packages that are not shipped in the RHEL/CentOS ISO files:

cd iRedMail-0.4.0/pkgs/

sh get_all.sh

Run the script to install:

cd ..

sh iRedMail.sh

Step1:welcome page

Step2:Mail delivery (mailboxes) path, all emails should be stored in this directory.

Step3:Choose backend to store virtual domains and virtual users.Note: Please choose the one you are familiar. Here we use MySQL for example.

Step4:Set MySQL account 'root' password.

Step5:Set MySQL account 'vmailadmin' password.Note: vmailadmin is used for manage all virtual domains & users, so that you don't need MySQL root privileges

Step5:Set MySQL account 'vmailadmin' password.Note: vmailadmin is used for manage all virtual domains & users, so that you don't need MySQL root privileges

Step6:Set first virtual domain. e.g. test.vn, botay.com, etc.

Step7:Set admin user for first virtual domain you set above. e.g. postmaster.

Step7:Set admin user for first virtual domain you set above. e.g. postmaster.  Step8:Set password for admin user you set above.

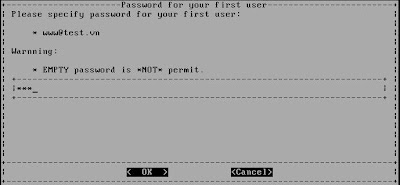

Step8:Set password for admin user you set above. Step9:Set first normal user. e.g. www.

Step9:Set first normal user. e.g. www. Step10:Set password for normal user you set above.

Step10:Set password for normal user you set above.  Step11:Enable SPF Validation, DKIM signing/verification or not.

Step11:Enable SPF Validation, DKIM signing/verification or not. Step12:Enable managesieve service or not

Step12:Enable managesieve service or not Step13:Enable POP3, POP3S, IMAP, IMAPS services or not

Step13:Enable POP3, POP3S, IMAP, IMAPS services or not Step14:Choose your prefer webmail programs

Step14:Choose your prefer webmail programs Step15:Choose optional components. It's recommended you choose all.

Step15:Choose optional components. It's recommended you choose all.  Step16:If you choose PostfixAdmin above, you need to set a global admin user. It can manage all virtual domains and users.

Step16:If you choose PostfixAdmin above, you need to set a global admin user. It can manage all virtual domains and users. Step17:If you choose Awstats as log analyzer, you will be prompted to set a username and password

Step17:If you choose Awstats as log analyzer, you will be prompted to set a username and password

Step18:Set mail alias address for root user in operation system

Step18:Set mail alias address for root user in operation systemStep19:drink coffee :D and wait few minutes

Step20:reboot and enjoy
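Once the server is back up, a quick look at the listening ports shows whether the mail services started. This is a sketch that assumes the standard port numbers (SMTP 25, POP3 110, IMAP 143, IMAPS 993, POP3S 995) and that netstat is installed; the `is_listening` helper is hypothetical.

```shell
# Service name and standard TCP port, one pair per line (assumed defaults).
SERVICES="smtp:25
pop3:110
imap:143
imaps:993
pop3s:995"

# Hypothetical helper: reads `netstat -tln` output on stdin and succeeds
# if some address in it ends in the given port number.
is_listening() {
    grep -Eq ":$1[[:space:]]"
}

# Only run the check if netstat is available on this machine.
if command -v netstat >/dev/null 2>&1; then
    LISTEN="$(netstat -tln 2>/dev/null)"
    for entry in $SERVICES; do
        name="${entry%%:*}"
        port="${entry##*:}"
        if printf '%s\n' "$LISTEN" | is_listening "$port"; then
            echo "$name (port $port): listening"
        else
            echo "$name (port $port): not listening"
        fi
    done
fi
```

Services you chose not to enable during the installation will show up as not listening, which is expected.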

Step 21: After the reboot, create a mailbox with PostfixAdmin:

https://mail.test.vn/postfixadmin

After logging in, choose Virtual List -> Add Mailbox.

Step 22: Create a mailing list

Choose Virtual List -> Add Alias.

Step 23: Test the configuration and the account

Configure the account in Outlook Express and check mail.

You can also check mail with webmail: https://mail.test.vn/mail/
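To have something to look for in the inbox, you can send a test message from the server's command line. The sketch below only builds and previews the message; it assumes the normal user from Step 9 was named www, and the `build_test_message` helper is hypothetical.

```shell
FROM="postmaster@test.vn"
TO="www@test.vn"   # assumes the normal user from Step 9 is "www"

# Hypothetical helper: print a minimal mail message (headers, a blank
# separator line, then the body) for the given sender and recipient.
build_test_message() {
    printf 'From: %s\n' "$1"
    printf 'To: %s\n' "$2"
    printf 'Subject: %s\n' "iRedMail test message"
    printf '\n'
    printf '%s\n' "This is a test message sent from the command line."
}

# Preview the message on the terminal.
build_test_message "$FROM" "$TO"

# To actually send it on the mail server, pipe it into Postfix's
# sendmail command (-t reads the recipients from the headers):
#   build_test_message "$FROM" "$TO" | /usr/sbin/sendmail -t
```

After sending, the message should appear in the mailbox of the recipient; if it does not, the mail log (usually /var/log/maillog on CentOS) is the first place to look.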

Beyond that, you can build a mail server cluster with MySQL replication, and you can adjust the configuration manually with the help of http://www.postfix.org/